No, wait, “Maven is to Ant as Linux From Scratch! is to Ubuntu”…

No, no, hold up – “Maven is to Ant as the Vidalia Slice Wizard is to the Potato Peeler”…

I think Maven is great.

I discovered something really neat-o the other day. Here’s the definition of the word maven:

“an expert or connoisseur.”

Maven is Just That™. You don’t have to know anything about building*; you leave it to the Maven. The Expert. The Connoisseur, you might say…

It knows how to do so much for you. It has many, many tricks up its sleeve, and people are teaching it more every day! Plus, Ant seems to be considered effectively complete and is not under much active development. The leaders in the game are moving on to greener pastures.

Dependencies in Control

The main complaint people have is the online thing: you pretty much have to be online for Maven to work reliably.

One fix for this is to host your own Maven repo inside your project directory and commit it to version control. This is effectively the same as having a /libs dir and sticking all your jars in there, except you get all the benefits of Maven’s dependency management. Great!

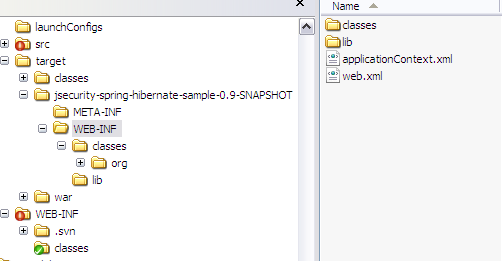

Here’s how:

- Run mvn -Dmdep.useRepositoryLayout=true -Dmdep.copyPom=true dependency:copy-dependencies

This creates target/dependency containing all your project’s dependencies (and, thanks to copyPom, their POMs) in a repository-like layout.

- Copy target/dependency to something like /libs

- Add the location of the repository to pom.xml like so:

```xml
<project>
  ...
  <repositories>
    <repository>
      <releases />
      <id>local</id>
      <name>local</name>
      <url>file:///${basedir}/libs</url>
    </repository>
  </repositories>
</project>
```

NB: ${basedir} is required; otherwise Maven complains that the path isn’t absolute.

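- Commit!

- Note: you can also do this for a multi-module project and have all modules share the same common repository. Just make sure you run the copy-dependencies command from your parent POM.

- You can also install custom dependencies, such as Oracle DB drivers, into such a repository using the install-file goal. The square brackets below mark optional parameters (drop the brackets when you use one). Since install-file writes to your usual local repository by default, add -DlocalRepositoryPath=libs to target the project repository instead: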

```shell
mvn install:install-file -Dfile=your-artifact-1.0.jar \
  [-DpomFile=your-pom.xml] \
  [-DgroupId=org.some.group] \
  [-DartifactId=your-artifact] \
  [-Dversion=1.0] \
  [-Dpackaging=jar] \
  [-Dclassifier=sources] \
  [-DgeneratePom=true] \
  [-DcreateChecksum=true]
```

NB: the easier way to use the install-file goal is not to type it yourself at all. Try to build your project; Maven will complain about the missing dependency and print instructions for running install-file, with nearly all the options pre-filled according to the dependency description in your POM.
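Both halves of this section can also be captured in the POM itself. The fragment below is a minimal sketch, not the only way to do it. The dependency-plugin configuration sets useRepositoryLayout, copyPom and outputDirectory (parameters of the copy-dependencies goal), so a plain mvn dependency:copy-dependencies writes the repository layout straight into /libs and the manual copy step goes away. The dependency shows how a driver installed with install-file is then referenced; its Oracle coordinates are hypothetical, so use whatever groupId, artifactId and version you passed to install-file.

```xml
<project>
  ...
  <dependencies>
    <!-- hypothetical coordinates: must match what you passed to install-file -->
    <dependency>
      <groupId>com.oracle</groupId>
      <artifactId>ojdbc</artifactId>
      <version>10.2</version>
    </dependency>
  </dependencies>
  <build>
    <plugins>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-dependency-plugin</artifactId>
        <configuration>
          <!-- same effect as -Dmdep.useRepositoryLayout=true -Dmdep.copyPom=true -->
          <useRepositoryLayout>true</useRepositoryLayout>
          <copyPom>true</copyPom>
          <!-- write straight into the project repository instead of target/dependency -->
          <outputDirectory>${basedir}/libs</outputDirectory>
        </configuration>
      </plugin>
    </plugins>
  </build>
</project>
```

Assuming the driver was installed into /libs, Maven resolves it through the file:// repository declared earlier, so nobody else on the team needs to run install-file.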

Taking Maven completely Off-Line

The other thing people talk a lot about is other build tools that are better than Ant, e.g. Gant, Raven (Ruby build scripts for Java) or some other one I heard of recently – ah-ha! found it – Gosling (I’m sure I read a recent article about it somewhere that pointed to a newer website?), which lets you write your build in Java and then just wraps Ant! Bizarro.

Another one is that people are concerned about their build tool changing itself over time, so their build is not necessarily stable and reproducible. I love the idea of self-upgrading software – hey, it’s one step closer to the end of the world, right? Well, I for one welcome our build tool overlords. But seriously, I think the advantage of having the build tool upgrade itself, getting the latest bug fixes and feature updates, outweighs the disadvantage of the build breaking one day. So what? You take an hour out, or half a day, or even a day, and fix it!

But if you work in a stiff, rigid environment, or for NASA or the military or something, there’s a way around this as well. This is also the suggested best practice for dealing with plugins. (OK, I remember reading this information somewhere, but it was harder to find again than I thought.)

- Run mvn help:effective-pom -Doutput=effective.pom. This writes out your project’s effective POM, including the plugin versions it is currently using.

- Open effective.pom and copy the build->pluginManagement section into your POM, optionally deleting the configuration and keeping just the groupId, artifactId and version.

- Run mvn package to make sure your project still builds, which tests that you got the pluginManagement right.

- Rename your local repository (usually ~/.m2/repository) to repository.bak.

- Run mvn dependency:go-offline – this downloads all the plugins and dependencies your project needs into a fresh, empty repository.

- Move the repository into your project directory.

- Add the project repository to your POM as described above.

- Try running mvn package with the --offline option and make sure everything’s OK.

- Rename your backup from repository.bak back to repository.

- Commit.

- Done! You should now be able to build the project from a fresh checkout with an empty local repository.

- If you’ve gone this far, you may as well commit the version of Maven you’re using into source control too, in a directory such as /tools/maven.

***

When MDEP-177 is addressed, this will be much easier to do.
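For reference, the trimmed-down pluginManagement section from the second step might look something like this. The plugins and version numbers shown are placeholders for illustration only; copy the real entries from your own effective.pom.

```xml
<build>
  <pluginManagement>
    <plugins>
      <!-- placeholder versions: take yours from effective.pom -->
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-compiler-plugin</artifactId>
        <version>2.0.2</version>
      </plugin>
      <plugin>
        <groupId>org.apache.maven.plugins</groupId>
        <artifactId>maven-surefire-plugin</artifactId>
        <version>2.4.2</version>
      </plugin>
      <!-- ...one entry per plugin listed in effective.pom -->
    </plugins>
  </pluginManagement>
</build>
```

Keeping only groupId, artifactId and version pins the plugins without duplicating configuration that already lives elsewhere in your POM.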

On another note, as far as running offline is concerned, the other really neat-o thing you should definitely do if there are more than two of you on location (or if you’re keen to share snapshots easily) is set up a local Maven repository cache/proxy/mirror using Nexus. IMO, don’t bother trying Artifactory or the other one; Nexus is da’ bomb.
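Once Nexus is running, pointing everyone at it is a change to each developer’s settings.xml. A minimal sketch, assuming a Nexus install on the hypothetical host nexus.example.com exposing a public repository group at the path shown; check your own instance for the real URL.

```xml
<settings>
  <mirrors>
    <mirror>
      <id>nexus</id>
      <!-- mirror every remote repository, but not localhost or file:// ones -->
      <mirrorOf>external:*</mirrorOf>
      <!-- hypothetical URL: use the group URL your Nexus instance shows -->
      <url>http://nexus.example.com:8081/nexus/content/groups/public</url>
    </mirror>
  </mirrors>
</settings>
```

The external:* pattern (Maven 2.0.9 and later) is used instead of a plain * so the project-local file:// repository described earlier is still read directly rather than being redirected to Nexus.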

Ant, Ivy and Transition

What I don’t think these people appreciate is that the beauty of Maven is that you don’t write any build logic**! None of these other tools really address this problem! Making Ant easier to write still means you have to write Ant! Yuck! As far as I’m concerned, our job is to further the state of the art, and that means achieving more while doing less! Maven is exactly that.

Ivy is all well and good, but Maven is just so much more. In fact, since Maven does everything Ivy does (to a degree), and Maven can be used from Ant (i.e. you can integrate Maven’s dependency management into your Ant build instead of using Ivy), I would propose that the Ivy developers stop working on Ivy now and bring whatever they thought they could do better with Ivy to Maven’s dependency management system. A little competition never hurt anyone, though…

If you don’t want to adopt Maven outright (e.g. because you have a very large project), you could use the Maven Ant tasks to let Maven handle your dependency management, instead of doing it all manually with Ant or, better, with Ivy. This is what the JBoss Application Server team have done, btw – you can look at their source code for hints on how to get started.
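A minimal sketch of what that looks like in a build.xml, assuming the maven-ant-tasks jar is on Ant’s classpath (e.g. via ant -lib). The project name, directories and the junit dependency are illustrative only. artifact:dependencies resolves the listed dependencies plus their transitive dependencies through Maven and exposes them as an ordinary Ant path under the pathId you choose.

```xml
<project name="example" default="compile"
         xmlns:artifact="antlib:org.apache.maven.artifact.ant">
  <!-- resolve dependencies (and their transitive dependencies) with Maven -->
  <artifact:dependencies pathId="dependency.classpath">
    <!-- illustrative dependency: list your own here -->
    <dependency groupId="junit" artifactId="junit" version="3.8.2" scope="test" />
  </artifact:dependencies>

  <target name="compile">
    <mkdir dir="build/classes" />
    <!-- the resolved jars are used like any other Ant path -->
    <javac srcdir="src" destdir="build/classes" classpathref="dependency.classpath" />
  </target>
</project>
```

The rest of the Ant build stays as it is; only the hand-maintained lib directory is replaced.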

Or you can do as I did on my last project at IBM: start using Maven entirely for the components you are working on, and integrate its output into your legacy Ant build.

How to Integrate Maven into a Legacy Ant multi-component Build

I first created a POM that modelled the non-Maven component my Maven components wanted to rely on, then added this target to the Ant build so that it installs the POM and its artefacts into the local repository. That way the other Maven-built components don’t need to think about Ant at all.

```xml
<!-- common.xml defines the "maven" macro used by install-parent-pom -->
<import file="../common.xml" />

<!-- the artifact: prefix needs xmlns:artifact="antlib:org.apache.maven.artifact.ant"
     declared on the enclosing <project> element -->
<target name="maven-repo-install" depends="install-parent-pom">
  <artifact:dependencies settingsFile="../tools/maven/conf/settings.xml" />
  <property name="M2_HOME" value="../tools/maven" />
  <artifact:localRepository id="local.repository" path="c:/repository" layout="default" />
  <!-- install main pom -->
  <artifact:pom id="pom.es" file="pom.xml">
    <localRepository refid="local.repository" />
  </artifact:pom>
  <artifact:install>
    <localRepository refid="local.repository" />
    <pom refid="pom.es" />
  </artifact:install>
</target>
```

The gotcha is that when you call Ant on this build.xml, you need to add the maven-ant-tasks jar to its classpath. You can also make a .bat or .sh out of this:

```bat
REM // add maven-ant-tasks ant targets
ant -lib ..\tools\maven-ant-tasks-lib %*
```
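One thing to watch: the maven-repo-install target above passes artifact:install only a POM and no file, which fits a pom-packaged project. For a jar component, the task’s file attribute names the artifact to install alongside that POM. A trimmed-down sketch of the same target doing that, where dist/legacy-component.jar is a hypothetical path standing in for whatever your Ant build actually produces:

```xml
<target name="maven-repo-install" depends="install-parent-pom">
  <artifact:localRepository id="local.repository" path="c:/repository" layout="default" />
  <artifact:pom id="pom.es" file="pom.xml">
    <localRepository refid="local.repository" />
  </artifact:pom>
  <!-- hypothetical path: point file at the jar your Ant build produces -->
  <artifact:install file="dist/legacy-component.jar">
    <localRepository refid="local.repository" />
    <pom refid="pom.es" />
  </artifact:install>
</target>
```

Make sure the Ant target that builds the jar runs before this one, e.g. by adding it to the depends list.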

To make sure your Ant-built project’s Maven twin has access to its parent POM if it needs it, add this target and make your install target depend on it:

```xml
<!-- setup maven parent -->
<target name="install-parent-pom">
  <!-- install pom -->
  <maven basedir="../" goal="install" mvnargs="-N" />
</target>
```

To call a Maven command easily from an Ant script, I pilfered this macro from the JBoss Application Server build script and modified it (thanks, guys!):

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project name="common-ant-tasks">
  <!-- maven execution target definition -->
  <macrodef name="maven">
    <attribute name="goal" />
    <attribute name="basedir" />
    <attribute name="mvnArgs" default="" />
    <!--
      project.root should point to the root directory of the oasis project
      structure, i.e. the directory which contains setup and tools.
    -->
    <attribute name="project.root" default="${basedir}/../" />
    <element name="args" implicit="true" optional="true" />
    <sequential>
      <!-- Ant properties are immutable, so without <local> (Ant 1.8+) a second
           call would still see the first call's result and could miss a failure -->
      <local name="isFileAvail" />
      <local name="maven.result" />
      <!-- maven location -->
      <property name="maven.dir" value="@{project.root}/tools/maven" />
      <property name="mvn" value="${maven.dir}/bin/mvn.bat" />
      <property name="thirdparty.maven.opts" value="" />
      <!-- check mvn exists -->
      <available file="${mvn}" property="isFileAvail" />
      <fail unless="isFileAvail" message="Maven not found here ${mvn}!" />
      <!-- call maven -->
      <echo message="Calling mvn command located in ${maven.dir}" />
      <echo message=" - from dir: @{basedir}" />
      <echo message=" - running Maven goals: @{goal}" />
      <echo message=" - running Maven arguments: @{mvnArgs}" />
      <!-- Maven 2.x launcher class; Maven 3 uses org.codehaus.plexus.classworlds.launcher.Launcher -->
      <java classname="org.codehaus.classworlds.Launcher" fork="true"
            dir="@{basedir}" resultproperty="maven.result">
        <classpath>
          <fileset dir="${maven.dir}/boot">
            <include name="*.jar" />
          </fileset>
          <fileset dir="${maven.dir}/lib">
            <include name="*.jar" />
          </fileset>
          <fileset dir="${maven.dir}/bin">
            <include name="*.*" />
          </fileset>
        </classpath>
        <sysproperty key="classworlds.conf" value="${maven.dir}/bin/m2.conf" />
        <sysproperty key="maven.home" value="${maven.dir}" />
        <arg line="--batch-mode ${thirdparty.maven.opts} -ff @{goal} @{mvnArgs}" />
      </java>
      <!-- check maven return result -->
      <fail message="Unable to build Maven goals. See Maven output for details.">
        <condition>
          <not>
            <equals arg1="${maven.result}" arg2="0" />
          </not>
        </condition>
      </fail>
    </sequential>
  </macrodef>
</project>
```

And if you have other legacy Ant-built components that want to blindly use Ant to build their dependencies, and you aren’t using the Maven Ant tasks in that project, you can wrap each Maven-built dependency’s Maven build in a build.xml like this one, which uses the Ant macro definition above:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Redirects to Maven to build -->
<project name="anAntBuiltProject" default="install" basedir=".">
  <!-- import the "maven" macro defined in common.xml -->
  <import file="../common.xml"/>
  <target name="install">
    <maven basedir="${basedir}" goal="install -N"/>
  </target>
</project>
```

Conclusion

The future’s got to go somewhere. Maven is a huge innovation in the build department, and definitely a big leap in the right direction.

In fact, I wouldn’t be surprised if someone migrates the Ant build to Maven 😉 – that’s a joke btw.

*** I haven’t actually tried this yet 😉 Let me know how you get along if you give it a go.

** This is the best-case scenario, and for most projects I think it’s true. However, if you get yourself into funky corner cases, sometimes you gotta throw in some plugin-fu or some embedded Ant-fu to get things just the way you want. That said, I have only had to do this when dealing with the headache of grafting Maven onto legacy projects that weren’t designed with standard build procedures in mind.

* OK, yes, you have to know how to use Maven. But Maven is a lot easier to use in the long run than Ant, fo sure.
